PyVBMC Example 6: Noisy log-likelihood evaluations#

In this notebook, we demonstrate running PyVBMC with noisy evaluations of the log-likelihood. Here we emulate a noisy scenario by adding random Gaussian noise to the log-likelihood. In practice, noisy evaluations often emerge from estimation techniques for simulation-based models, such as Inverse Binomial Sampling (IBS). However the noise estimate is obtained, it is allowed to be (and in practice, often is) heteroskedastic: that is, the amount of noise in the estimate may vary across parameter space.

This notebook is Part 6 of a series of notebooks in which we present various example usages for VBMC with the PyVBMC package. The code used in this example is available as a script here.

import numpy as np

import scipy.stats as scs

from pyvbmc import VBMC, VariationalPosterior

import matplotlib.pyplot as plt

1. Model definition: log-prior, noisy log-likelihood and log-joint#

We use the same toy target function as in Example 1, a broad Rosenbrock’s banana function in \(D = 2\). But this time, we add random heteroskedastic Gaussian noise to the function evaluations.

D = 2 # We'll use a 2-D problem, again for speed

prior_mu = np.zeros(D)

prior_var = 3 * np.ones(D)

LB = np.full((1, D), -np.inf) # Lower bounds

UB = np.full((1, D), np.inf) # Upper bounds

PLB = np.full((1, D), prior_mu - np.sqrt(prior_var)) # Plausible lower bounds

PUB = np.full((1, D), prior_mu + np.sqrt(prior_var)) # Plausible upper

def log_prior(theta):

"""Multivariate normal prior on theta, same as before."""

cov = np.diag(prior_var)

return scs.multivariate_normal(prior_mu, cov).logpdf(theta)

The setup and prior function remain unchanged. For the likelihood, we’ll take the Rosenbrock function and add some simulated noise to it. The noise here is arbitrary, but notice that the amount depends on the parameters \(\theta\), with more noise when \(\theta\) is farther from the origin: It is typical to have noisier estimates in lower-density regions of the posterior.

In the noisy-likelihood setting, the log-joint (or log-likelihood, if the prior is passed to PyVBMC separately) should return a pair of values: the noisy log-density as the first, and an estimate of the standard deviation of the noise as the second.

# log-likelihood (Rosenbrock)

def log_likelihood(theta):

"""D-dimensional Rosenbrock's banana function."""

theta = np.atleast_2d(theta)

n, D = theta.shape

# Standard deviation of synthetic noise:

noise_sd = np.sqrt(1.0 + 0.5 * np.linalg.norm(theta) ** 2)

# Rosenbrock likelihood:

x, y = theta[:, :-1], theta[:, 1:]

base_density = -np.sum((x**2 - y) ** 2 + (x - 1) ** 2 / 100, axis=1)

noisy_estimate = base_density + noise_sd * np.random.normal(size=(n, 1))

return noisy_estimate, noise_sd

Since the log-likelihood function now has two outputs, we cannot directly sum the log-prior and log-likelihood. So our log-joint function looks slightly different. If you choose to pass the log-likelihood and log-prior separately, PyVBMC will handle this part for you.

# Full model:

def log_joint(theta, data=np.ones(D)):

"""log-density of the joint distribution."""

log_p = log_prior(theta)

log_l, noise_est = log_likelihood(theta)

# For the joint, we have to add log densities and carry-through the noise estimate.

return log_p + log_l, noise_est

x0 = np.zeros((1, D)) # Initial point

We omit optimizing x0 to find a good starting point, as previously suggested, because naive optimization would fail badly for a noisy objective anyway. For noisy optimization, consider using BADS or its Python port PyBADS — coming soon!

2. Setting up a noisy VBMC instance#

As a final step, we have to tell PyVBMC that we are working with a noisy likelihood, by turning on the specify_target_noise option. Otherwise PyVBMC will throw an InvalidFuncValue error, indicating that the target function is returning unexpected values.

options = {"specify_target_noise": True}

vbmc = VBMC(

log_joint,

x0,

LB,

UB,

PLB,

PUB,

options=options,

)

np.random.seed(42)

vp, results = vbmc.optimize()

Beginning variational optimization assuming NOISY observations of the log-joint

Iteration f-count Mean[ELBO] Std[ELBO] sKL-iter[q] K[q] Convergence Action

0 10 -1.09 0.67 254282.65 2 inf start warm-up

1 15 -2.73 0.59 4.37 2 inf

2 20 -2.82 0.44 0.02 2 0.964

3 25 -2.72 0.40 0.31 2 7.87

4 30 -2.81 0.33 0.01 2 0.603 end warm-up

5 35 -2.14 0.39 0.21 2 5.82

6 40 -2.22 0.31 0.02 2 0.89

7 45 -1.95 0.26 0.04 5 1.41

8 50 -1.92 0.24 0.01 6 0.492 rotoscale, undo rotoscale

9 55 -1.74 0.24 0.04 9 1.31

10 60 -1.83 0.23 0.01 10 0.583

11 65 -1.93 0.21 0.02 10 0.829

12 70 -1.86 0.20 0.00 10 0.288

13 75 -1.85 0.19 0.00 10 0.211

14 80 -1.79 0.18 0.01 13 0.452

15 85 -1.73 0.18 0.02 16 0.657

16 90 -1.72 0.17 0.00 19 0.204

17 95 -1.67 0.17 0.01 20 0.332

18 100 -1.63 0.17 0.01 19 0.393

19 105 -1.62 0.17 0.01 19 0.336 rotoscale, undo rotoscale

20 110 -1.69 0.16 0.01 20 0.342

21 115 -1.67 0.16 0.00 18 0.25 stable

inf 115 -1.67 0.16 0.01 50 0.25 finalize

Inference terminated: variational solution stable for options.tol_stable_count fcn evaluations.

Estimated ELBO: -1.673 +/-0.157.

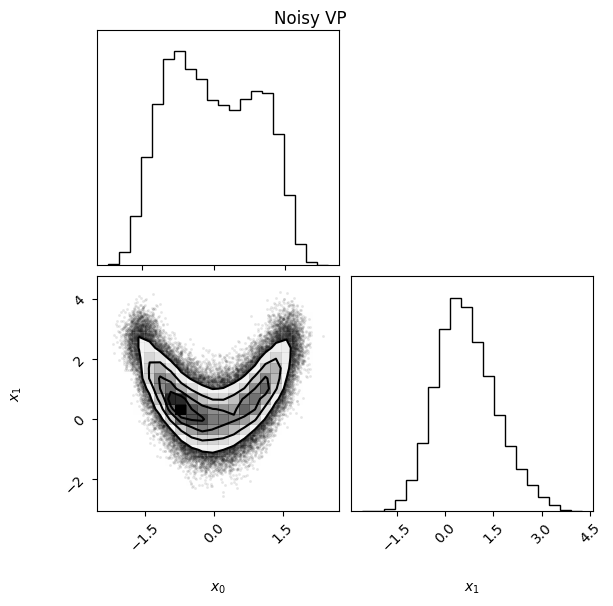

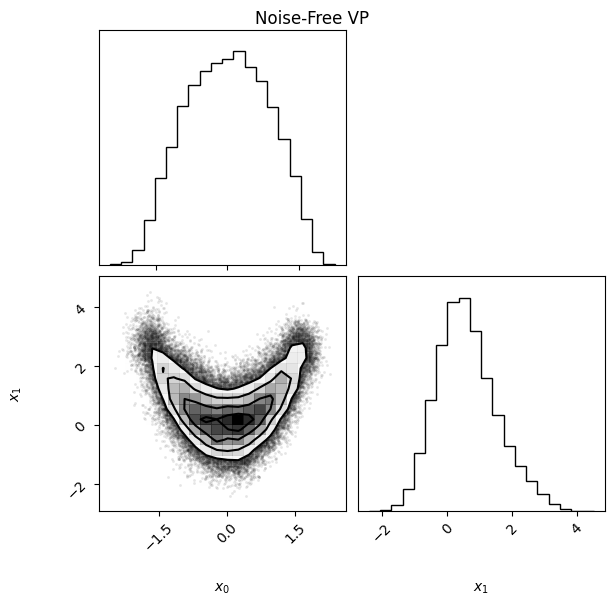

PyVBMC found a solution, even in the presence of noise. Let’s compare to the variational posterior we found in the previous notebook, for the noise-free version of the same problem.

noise_free_vp = VariationalPosterior.load("noise_free_vp.pkl")

# KL divergence between this VP and the noise-free VP:

print(vbmc.vp.kl_div(vp2=noise_free_vp))

[0.02898929 0.02879815]

The KL divergence in both directions is small, and the ELBO is close to what we obtained before. We can also compare the densities visually and see that they are quite similar despite the noisy target:

vp.plot(title="Noisy VP");

noise_free_vp.plot(title="Noise-Free VP");

3. Conclusions#

In this notebook, we have illustrated how to use PyVBMC on a model with a noisy likelihood. Even in the presence of significant noise, PyVBMC can find a good variational solution in relatively few function evaluations.

Acknowledgments#

Work on the PyVBMC package was funded by the Finnish Center for Artificial Intelligence FCAI.